About me

I am Caiwen Ding, an Associate Professor in the Department of Computer Science & Engineering at the University of Minnesota, Twin Cities (since Aug 2024). Previously, I was an assistant professor at the University of Connecticut. I received my Ph.D. from Northeastern University (NEU), Boston in 2019, supervised by Prof. Yanzhi Wang.

My research interests include algorithm-system co-design of ML/AI; computer architecture and heterogeneous computing (FPGAs/GPUs); privacy-preserving machine learning; machine learning for electronic design automation (EDA); neuromorphic computing; computer vision and natural language processing.

My work has been published in top-tier venues including DAC, ICCAD, ASPLOS, ISCA, MICRO, HPCA, CCS, Oakland, SC, FPGA, MLSys, NeurIPS, ICML, ICLR, CVPR, AAAI, ACL, EMNLP, IJCAI, ICRA, and DATE. I am a recipient of the NSF CAREER Award, Amazon Research Award, and CISCO Research Award. I received the Best Paper Award at 2025 ICLAD, Outstanding Student Paper Award at 2023 HPEC, Best Paper Award at 2023 AAAI DCAA workshop, and Best Paper Award Nominations at DATE 2018 and 2021.

News

- [Feb, 2026] One paper accepted by DAC 2026

- [Jan, 2026] Honored to receive a Gift Grant from Adobe

- [Jan, 2026] Two papers accepted by ICLR 2026

- [Jan, 2026] Honored to receive a Gift Grant from Amazon

- [Dec, 2025] Honored to receive a Research Grant from DOE/LLNL

- [Sep, 2025] Two papers accepted by NeurIPS 2025

- [Aug, 2025] Honored to receive a Research Grant from NSF

- [Jul, 2025] Two papers accepted by ICCAD 2025

- [Jun, 2025] HiVeGen won Best Paper Award at ICLAD 2025!

- [May, 2025] Amit starts a summer internship at Adobe Research. Congrats!

- [May, 2025] Xi starts a summer internship at Nvidia. Congrats!

- [Apr, 2025] Two papers accepted to ICS 2025

- [Apr, 2025] One paper accepted to Scientific Reports

- [Mar, 2025] Honored to receive a CISCO Research Award

- [Mar, 2025] Workshop proposal 'DCgAA 2025: International Workshop on DL-Hardware Co-Design for Generative AI Acceleration' accepted to DAC 2025

- [Feb, 2025] One paper accepted to CVPR 2025

- [Feb, 2025] Honored to receive a Research Grant from DOE Lawrence Livermore National Laboratory as PI

- [Jan, 2025] One paper accepted to ICLR 2025

- [Dec, 2024] Hongwu successfully defended and will join Adobe Research as a Research Scientist. Congratulations, Dr. Peng!

- [Nov, 2024] Honored to receive a Research Grant from DOE

- [Oct, 2024] Our team won third place at the 2024 ICCAD Contest on LLM-Assisted Hardware Code Generation

- [Sep, 2024] One paper (MACM) accepted to NeurIPS 2024. It ranked #1 on Math Word Problem Solving on the MATH benchmark

- [Sep, 2024] Shanglin successfully defended and will join Walmart AI Lab as a Senior Data Scientist. Congratulations, Dr. Zhou!

- [Sep, 2024] Honored to receive a Research Grant from the DOT Safe Streets and Roads for All (SS4A) Grant Program

- [Aug, 2024] Caiwen joins the University of Minnesota, Twin Cities as an Associate Professor

- [Aug, 2024] Honored to receive a Research Grant from NIH/NCI

- [Jul, 2024] One paper accepted to ECCV 2024

- [Jun, 2024] One paper accepted to ICCAD 2024

- [May, 2024] Kiran: Predoctoral Honorable Mention; Jiahui: Predoctoral Fellowship; Hongwu: Predoctoral Prize for Research Excellence; Shaoyi: Buckman Fellowship; Shanglin: Predoctoral Fellowship; Amit: Ammar Fellowship

- [May, 2024] Hongwu starts a summer internship at Adobe Research. Congrats!

- [May, 2024] Shaoyi was selected as a 2024 ML and Systems Rising Star. Congrats!

- [May, 2024] Shaoyi will join Stevens Institute of Technology as a Tenure-Track Assistant Professor. Congrats!

- [Apr, 2024] Honored to receive a Research Grant from the Connecticut Cooperative Transportation Research Program

- [Apr, 2024] One paper accepted by ICS 2024

- [Mar, 2024] Honored to receive an Amazon Research Award

- [Mar, 2024] One paper accepted by ICPE 2024

- [Feb, 2024] Honored to receive the NSF CAREER Award

- [Feb, 2024] Honored to receive a CISCO Research Award

- [Dec, 2023] Honored to receive a Research Grant from DOE Lawrence Livermore National Laboratory as PI

- [Nov, 2023] One paper accepted by DATE 2024

- [Nov, 2023] One paper accepted by ASPLOS 2024

- [Oct, 2023] One paper accepted by HPCA 2024

- [Sep, 2023] One paper accepted by NeurIPS 2023

- [Aug, 2023] One paper at HPEC 2023 received the Outstanding Student Paper Award. Congrats to Bin!

- [Jul, 2023] One paper accepted by MICRO 2023

- [Jul, 2023] One paper accepted by ICCV 2023

- [Jun, 2023] One paper accepted by SC 2023

- [Apr, 2023] One paper accepted by ICML 2023

- [Apr, 2023] One paper accepted by IJCAI 2023

- [Mar, 2023] One paper accepted by IEEE S&P (Oakland) 2023

- [Feb, 2023] Two papers accepted to CVPR 2023

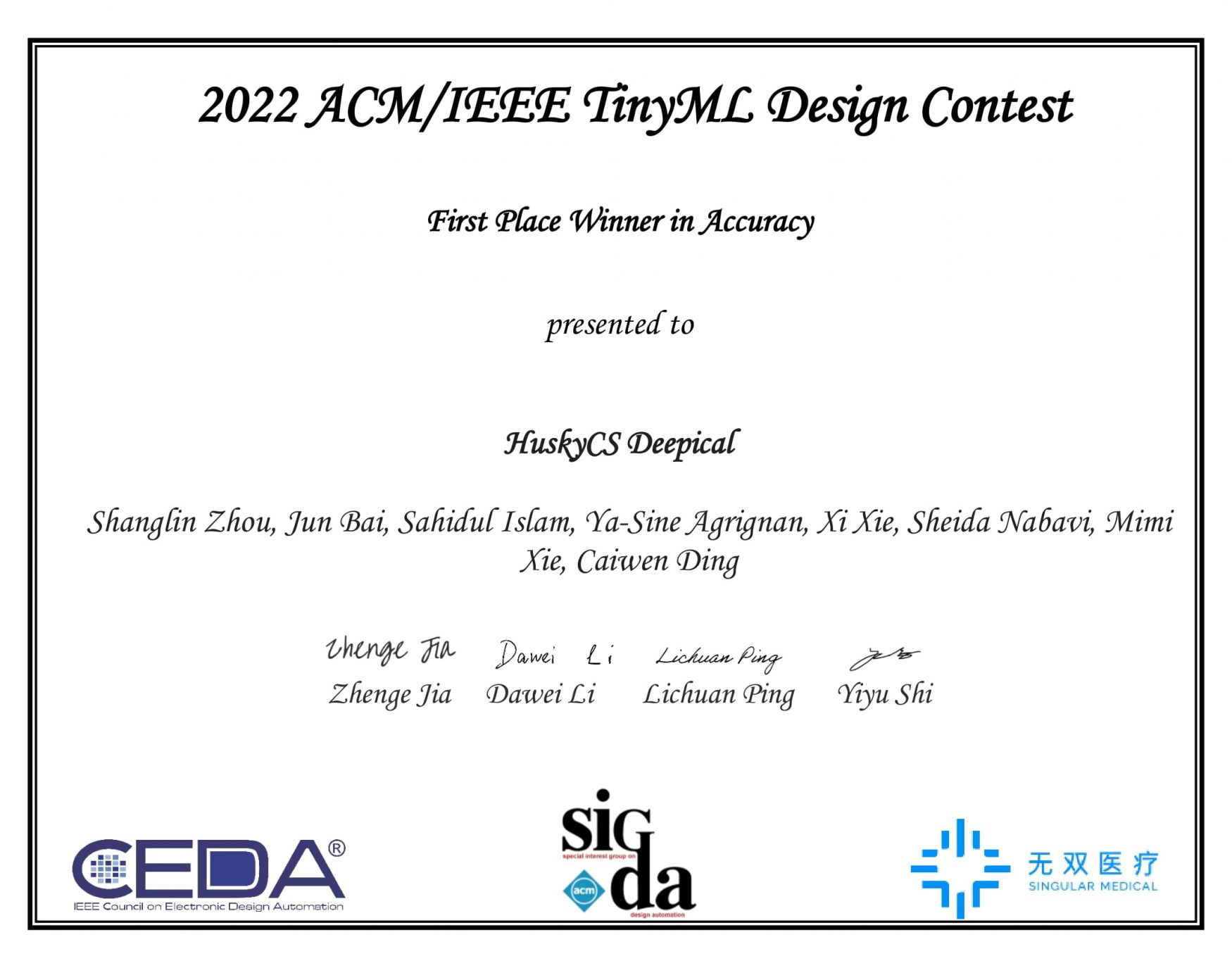

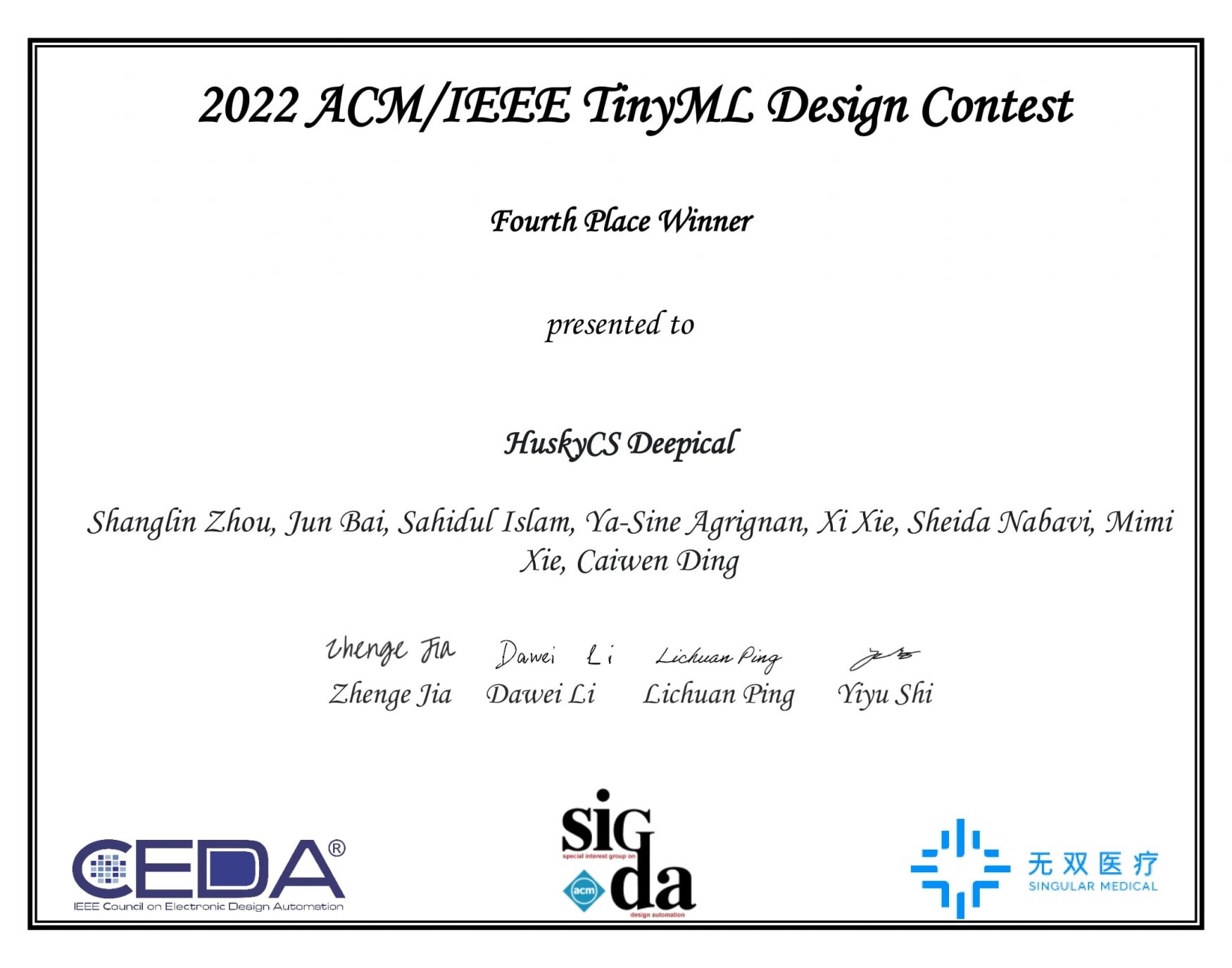

Selected Achievements

Research Areas

Research Sponsors